PRISONER'S DILEMMA

The prisoner's dilemma is one of many illustrative examples of the logical reasoning and complex decisions involved in game theory. It takes the form of a situation or game in which two people must separately make decisions that will have consequences not only for themselves but also for each other. People in this situation confront a dilemma: when motivated solely by self-interest, they face more severe consequences than when motivated by group interests, as illustrated below. To make the best choice, each player would have to know what the other will do, but the structure of the prisoner's dilemma denies players that knowledge unless the situation or game is repeated. The prisoner's dilemma is also generally characterized by its lack of a single optimal strategy and by the reliance of both parties on each other to achieve more favorable results.

When understood properly, this dilemma can be extended into hundreds of other, more complex dilemmas. The mechanisms that drive the prisoner's dilemma are the same as those faced by marketers, military strategists, poker players, and many other types of competitors. The simple models used in the prisoner's dilemma afford insights into how competitors will react to different styles of play, and these insights suggest how those competitors can be expected to act in the future. Many disciplines have studied the game, including artificial intelligence, biology, business, mathematics, philosophy, sociology, and political science.

Based on the game-theory research of Merrill Flood and Melvin Dresher for the RAND Corporation in 1950, Albert Tucker presented their findings in the form of the prisoner's dilemma scenario or game, using it to illustrate the failure of lowest-risk strategies and the potential for conflict between individual and collective rationality. Tucker suggested a model in which two players must each choose an individually rational strategy, given that each player's strategy may affect the other player.

In the example, two suspects are apprehended by police for robbing a store. Prosecutors cannot prove either actually committed the robbery, but have enough evidence to convict both on a lesser charge of possession of stolen property.

Both suspects are isolated with no means of communication and offered an opportunity to plea bargain. Each is asked to confess and testify against the other. If both prisoners refuse to confess, they will be convicted of the lesser charge based on circumstantial evidence and will serve one year in jail. If both confess and implicate each other, they will be convicted of robbery and sentenced to two years in jail.

If, however, prisoner A refuses to confess while prisoner B confesses and agrees to testify against A, prisoner B will be set free. Meanwhile, prisoner A may be convicted on the basis of prisoner B's testimony and be sentenced to six years in jail. The reverse applies if prisoner A confesses and prisoner B remains silent.

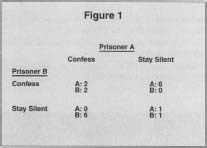

The choices available to the prisoners, and the consequences of those choices, may be represented in the matrix shown in Figure 1 (numbers are years sentenced to jail).

If the prisoners hope to avoid spending six years in jail, and are willing to risk serving two years to guarantee this, they will be motivated to confess. Confessing ensures that a prisoner serves no more than two years, regardless of what the other does.

This strategy of confession is called a dominant (or dominating) strategy because it yields a better outcome for the prisoner regardless of what the other prisoner does: freedom instead of one year if the other stays silent, and two years instead of six if the other confesses. It is also known as the "sure-thing" principle, because prisoners who confess know for certain that they will not serve more than two years.
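The dominance argument can be checked mechanically. The following is a minimal sketch, assuming the sentences described in the scenario above (one year each if both stay silent, two years each if both confess, and six years versus freedom if only one confesses). The names `SENTENCES`, `strictly_dominates`, and `worst_case` are illustrative and do not come from the original research.

```python
# Years in jail for (A's choice, B's choice) -> (A's sentence, B's sentence).
# The numbers are taken from the scenario in the text; the names are illustrative.
SENTENCES = {
    ("silent", "silent"): (1, 1),
    ("silent", "confess"): (6, 0),
    ("confess", "silent"): (0, 6),
    ("confess", "confess"): (2, 2),
}
CHOICES = ("silent", "confess")


def years_for_a(a_choice, b_choice):
    """Sentence served by prisoner A for a given pair of choices."""
    return SENTENCES[(a_choice, b_choice)][0]


def strictly_dominates(candidate, alternative):
    """True if `candidate` gives A fewer years than `alternative` whatever B does."""
    return all(
        years_for_a(candidate, b) < years_for_a(alternative, b) for b in CHOICES
    )


def worst_case(a_choice):
    """Longest sentence A can receive after making `a_choice`."""
    return max(years_for_a(a_choice, b) for b in CHOICES)


if __name__ == "__main__":
    print("confess dominates silent:", strictly_dominates("confess", "silent"))
    print("worst case if confessing:", worst_case("confess"))
    print("worst case if silent:", worst_case("silent"))
```

Because the game is symmetric, the same check applies to prisoner B with the roles reversed.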

But while the dominating strategy of confession is individually rational, a better outcome for both may be gained from a strategy that is collectively rational. For example, if prisoners A and B can be assured that neither will confess, and both are willing to serve a year in prison as a result of this decision, they will be motivated not to confess.

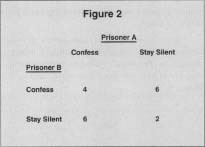

This strategy, which yields the prisoners the lowest total number of years in jail, two, is called a cooperative strategy. The matrix in Figure 2 illustrates collectively optimal choices. It repeats the choices presented earlier, but shows the total number of years that will be served by both in each instance.
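The collective totals can be derived directly from the individual sentences. The sketch below assumes the same sentences as the scenario described earlier and simply adds the two prisoners' years for each pair of choices; the names `SENTENCES` and `total_years` are illustrative.

```python
# Individual sentences for (A's choice, B's choice), as described in the text.
SENTENCES = {
    ("silent", "silent"): (1, 1),
    ("silent", "confess"): (6, 0),
    ("confess", "silent"): (0, 6),
    ("confess", "confess"): (2, 2),
}


def total_years(sentences):
    """Combined years served by both prisoners for each pair of choices."""
    return {choices: a + b for choices, (a, b) in sentences.items()}


totals = total_years(SENTENCES)
# The collectively best outcome is the pair of choices with the smallest total.
best = min(totals, key=totals.get)

if __name__ == "__main__":
    for choices, years in totals.items():
        print(choices, "->", years, "years in total")
    print("collectively best:", best)
```

Under these assumptions, mutual silence costs two years in total, mutual confession four, and a one-sided confession six.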

If the two prisoners are very loyal and refuse to implicate each other, they will both opt to remain silent and minimize the number of years they both must serve. To achieve this outcome, the two prisoners must have either an agreement that is reasonably enforceable and effective or sufficient confidence in each other.

If the agreement is not effective, the prisoners may be motivated to adopt the dominating strategy because they can improve their situation by relying on it. One prisoner may be allowed to go free while the other spends the next six years behind bars, or they may both spend two years in prison.

Indeed, one of the prisoners may be motivated to construct an agreement not to confess, specifically to cheat his or her partner and ensure his or her own freedom. This scenario demonstrates how uncooperative play can subvert cooperative strategies, and why knowledge is absolutely essential to making an individually optimal decision.

The prisoner's dilemma provides even more insights when examined in a series of cases where the dynamics change. For example, assume that prisoners A and B are arrested and A stays silent while prisoner B confesses, after both had agreed not to confess if caught. Prisoner B goes free while prisoner A spends the next six years in jail. Clearly, prisoner A has misjudged prisoner B.

Meanwhile, prisoner B returns to a life of crime and is picked up under identical circumstances with another partner, prisoner C. Prisoner C is aware of what B did to A last time, and has no intention of remaining silent, because C knows B cannot be trusted and C does not want to join prisoner A for six years.

Prisoner C possesses something A did not: superior knowledge about how prisoner B might act. Prisoner B knows prisoner C has this information, and like C will be motivated to confess. Hence, both are sentenced to two years in this scenario.

The fact that the game is repeated, or iterated, affords the players indications of each other's style of play based on past performance. By allowing opportunities for retribution, iterated play provides indications of how players will interact and how they will react to the consequences of uncooperative strategies.

For example, prisoner B would be well advised to retire from crime or to take considerable pains not to get caught, because B's past actions are likely to doom the chances that another partner who knows prisoner B's history will ever cooperate with prisoner B.

Iterated play against a programmed player—one whose decisions are predictable—will indicate how a player will react to opportunities to exploit the other. Assume that a player named Bob merely repeats the moves of another named Ray. Ray knows that whatever he does, Bob will do on the next move. Therefore, if Ray takes advantage of Bob on one move, Bob will reciprocate on the next move. This destructive cycle will continue until both can be convinced that they would benefit more from a cooperative style of play.

For example, if Bob and Ray are prisoners A and B, they might realize that repeatedly confessing against each other in a series of crimes is causing more damage than if they were to cooperate. This is illustrated in the tables, where both get two years if they confess, but only one if they cooperate and stay silent.

Examples of iterated play show that, as long as the benefits of cooperation outweigh the benefits of antagonism, players will eventually adopt a cooperative style of play. Both players will decide to cooperate because, over a series of repeated games, collective rationality becomes analogous to individual rationality.

Computer simulations of the prisoner's dilemma game have resulted in the discovery of what could be the optimal strategy for the game: the "tit for tat" approach. This strategy calls for playing cooperatively at first and, from then on, reciprocating the opponent's previous move, so that selfish play is answered with selfish play and cooperation with cooperation. This research demonstrated that the tit for tat approach produces better results than the competing "golden rule" strategy, which stipulates that players make their decisions based on what they would like other players to choose.
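Iterated play of this kind is straightforward to simulate. The following is a minimal sketch, not a reconstruction of the simulations mentioned above: it assumes the sentences from the two-prisoner scenario, treats staying silent as the cooperative move, and uses hypothetical strategy functions (`tit_for_tat`, `always_confess`) that each receive the opponent's past moves.

```python
# Sentences for (A's move, B's move) -> (A's years, B's years), as in the scenario.
SENTENCES = {
    ("silent", "silent"): (1, 1),
    ("silent", "confess"): (6, 0),
    ("confess", "silent"): (0, 6),
    ("confess", "confess"): (2, 2),
}


def tit_for_tat(opponent_history):
    """Cooperate (stay silent) first, then copy the opponent's previous move."""
    return "silent" if not opponent_history else opponent_history[-1]


def always_confess(opponent_history):
    """Uncooperative baseline: confess every round."""
    return "confess"


def play(strategy_a, strategy_b, rounds):
    """Return the total years served by each player over `rounds` games."""
    history_a, history_b = [], []
    total_a = total_b = 0
    for _ in range(rounds):
        # Each strategy sees only what the other player has done so far.
        move_a = strategy_a(history_b)
        move_b = strategy_b(history_a)
        years_a, years_b = SENTENCES[(move_a, move_b)]
        total_a += years_a
        total_b += years_b
        history_a.append(move_a)
        history_b.append(move_b)
    return total_a, total_b


if __name__ == "__main__":
    print("tit for tat vs tit for tat:", play(tit_for_tat, tit_for_tat, 10))
    print("tit for tat vs always confess:", play(tit_for_tat, always_confess, 10))
    print("always confess vs always confess:", play(always_confess, always_confess, 10))
```

In this sketch, two tit for tat players serve 10 years each over ten rounds, while two players who always confess serve 20 years each. Against a player who always confesses, tit for tat is exploited only in the first round and matches confession afterward.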

APPLICATIONS OF THE PRISONER'S DILEMMA

The prisoner's dilemma may be extended to competitive market situations. Assume for instance that there are only two grocers in a given market, grocer Bill and grocer Mary. Bill decides to attack Mary by undercutting her prices. Mary reciprocates by matching the price cuts. Both forsake profits, and even incur losses, hoping to force the other into submission.

Finally, Bill gives up and raises his prices. Mary, who can no longer afford to underprice Bill, raises her prices as well. Now neither is at a disadvantage. They have reached a cooperative arrangement after learning that the consequences of antagonism are mutually deleterious.

As this example shows, business competition often involves the tit for tat strategy. Businesses begin by "playing" cooperatively and setting their prices with reasonable profit margins. After that, they match their competitors' last move. Hence, they offer discounts if their competitors offer discounts and added value if their competitors offer added value. Unlike the grocers in the example, however, businesses hope that their competitors will realize, before they begin to suffer losses, that they cannot "win" unless they cooperate. Consequently, the prisoner's dilemma suggests that businesses may profit more from being less competitive (in terms of price at least) than from being more competitive.

A real-world example of the extended prisoner's dilemma exists in the fishing industry, where the rate of catches by fishers has increased faster than the ability of the fish to reproduce. The result is a depleted supply that has caused every fisher greater hardship.

The individually optimal strategy for fishers is to cooperate with each other by restraining the volume of their catches. Fishers forgo higher profits in the near term, but are assured of protecting their livelihood in the long term.

These examples directly contradict the accepted principle in economics that the individual pursuit of self-interest in a freely competitive market yields an optimal aggregated equilibrium. They demonstrate the application of diminishing returns to a finite resource.

The claim that cooperative strategies will prevail over uncooperative ones in iterated games gained support from a most unusual source: theoretical biology. Scientists concerned with evolutionary dynamics postulated that species that fight to the death work their way toward extinction.

It seems elementary that an animal determined to kill others of its kind would eventually cease to exist. Although contests within species are common, particularly in the selection of a mate, they often do not result in the death of one of the opponents. These contests reward the winner with a mate and reward the loser with survival for accepting defeat and walking away.

Assuming that the males and females of a species are produced randomly in equal numbers, repeated situations in which two males fight to the death for a single mate will yield a population in which females outnumber males. If these deaths limit the reproduction of the species because of a lack of males, the population growth of the species will be slowed.

If two males under the same conditions fight only for supremacy, rather than to the death, the loser may prevail in another contest with another yet weaker opponent, and still be allowed to reproduce. Thus, nonlethal combat may be viewed as cooperative in the collective sense.

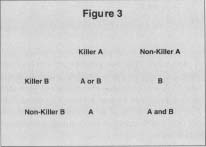

The prisoner's dilemma becomes relevant in evolutionary biology when one constructs a matrix to analyze the outcomes of contests between animals that are killers and those that are nonkillers (see Figure 3).

A single killer will prevail in three of the four contests, while two nonkillers will survive in one of the four. In iterated play, the killers will eventually destroy all the nonkillers. In a population left only with killers, successive contests between killers will yield fewer and fewer killers until the species cannot sustain itself.

Meanwhile, populations in which there are no killers will suffer no decrease in the male population due to lethal contests. A roughly equal distribution of males and females will remain, and the animals will pair off and reproduce the species. This example once again proves that cooperative strategies are dominant in iterated play.

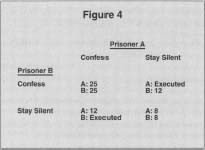

One instance where uncooperative play may benefit a player is in the case where the highest penalty is removal from the game. For example, if the two prisoners are implicated in a murder, but prosecutors can't determine who was the hit man, they may offer a plea bargain (see Figure 4).

If both confess, each gets 25 years. If they refuse to cooperate, they each get eight years. But, if prisoner A testifies that B actually did the murder, and B remains silent, prisoner B will be executed. While extreme, such a model by its nature precludes iteration because prisoner B will be dead.

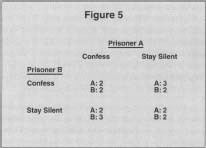

Another case where uncooperative strategies may prove dominant is where the penalties to each player are insufficient to provide cooperative motivations. Consider the following example in Figure 5.

Here, prisoners A and B are indifferent about confessing or staying silent because in either case they would get two years. But each is aware that if he remains silent while the other agrees to implicate him, he might face a third year in jail. Thus, in order to avoid the heavier penalty, both will adopt an uncooperative defensive strategy and confess.

The prisoner's dilemma may be extended to contests between more than two players. Assume, for example, that three prisoners, rather than two, are apprehended for robbery. Each prisoner must now weigh the possible outcomes of cooperation and antagonism with two counterparts. This scenario may be represented in a three-dimensional matrix with eight, rather than four, possible outcomes.

If there were a fourth partner, the matrix would require a fourth dimension, or array, with 16 possible outcomes. The number of outcomes in a multiple-player dilemma may be expressed as a formula: $C^n$, where C is the number of choices available to each player and n represents the number of players.
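The count of outcomes can be verified by enumerating every combination of choices. The sketch below assumes each player has the same two choices as in the two-prisoner scenario; the function name `outcomes` is illustrative.

```python
from itertools import product

# Each player picks one of these; the labels follow the scenario in the text.
CHOICES = ("silent", "confess")


def outcomes(num_players, choices=CHOICES):
    """List every combination of choices, one entry per player."""
    return list(product(choices, repeat=num_players))


if __name__ == "__main__":
    for n in (2, 3, 4):
        # The number of combinations equals len(choices) ** n.
        print(n, "players:", len(outcomes(n)), "outcomes")
```

With two choices per player, this yields 4, 8, and 16 outcomes for two, three, and four players, matching the counts given above.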

Tests with multiple-prisoner dilemmas support the position that cooperative strategies remain individually optimal, particularly in iterated play.

[John Simley, updated by Karl Hell]

FURTHER READING:

Felkins, Leon. "The Prisoner's Dilemma." 5 September 1995. Available from www.magnolia.net/l-eonf/sd/pd-brf.html .

Johnson, Robert R., and Bernard Siskin. Elementary Statistics for Business. PWS Pub. Co., 1985.

Maynard Smith, John. Evolution and the Theory of Games. Cambridge: Cambridge University Press, 1982.

Poundstone, William. Prisoner's Dilemma: John Von Neumann, Game Theory, and the Puzzle of the Bomb. New York: Anchor, 1993.

Rapoport, Anatol, and Albert M. Chammah. Prisoner's Dilemma: A Study in Conflict and Cooperation. Ann Arbor, MI: University of Michigan Press, 1965.

Spector, Jerry. "Strategic Marketing and the Prisoner's Dilemma." Direct Marketing, February 1997, 44.
